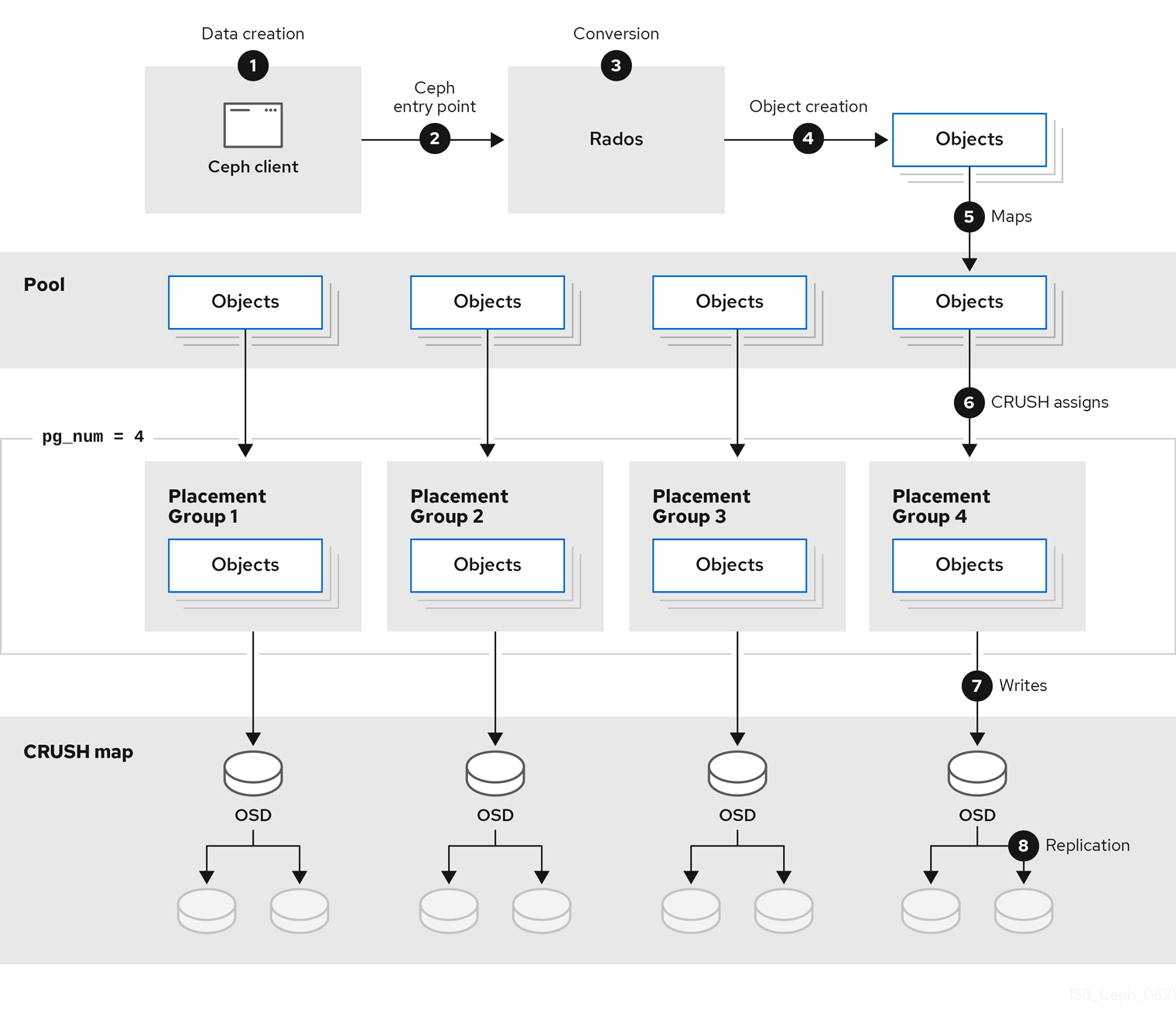

Marvell and Ingrasys Collaborate to Power Ceph Cluster with EBOF in Data Centers - Marvell Blog | We're Building the Future of Data Infrastructure

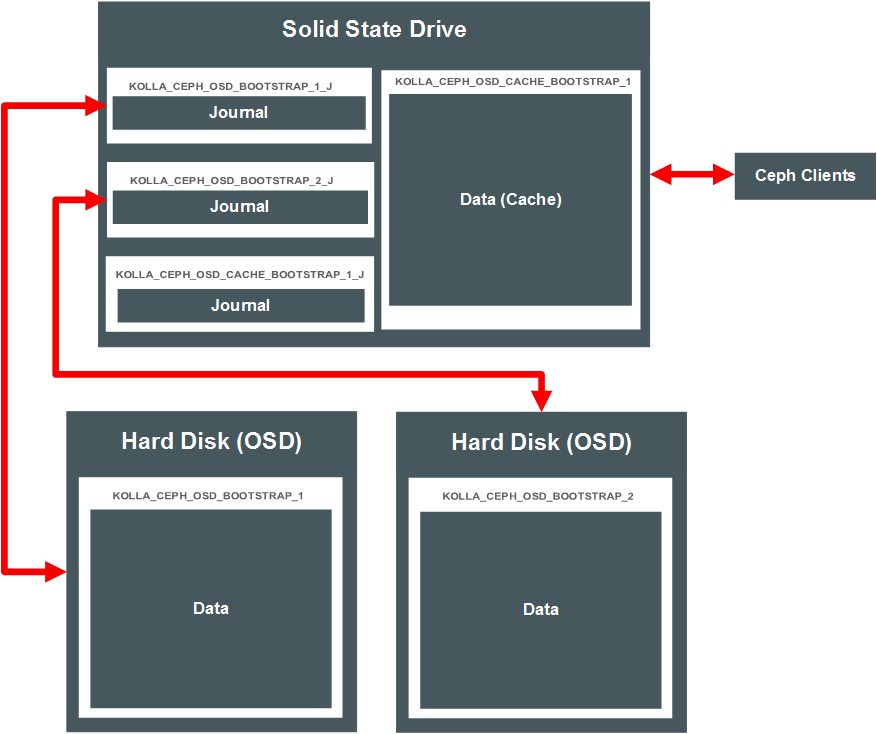

Chapter 6. Deploying second-tier Ceph storage on OpenStack Red Hat OpenStack Platform 15 | Red Hat Customer Portal
